The Rise of Security Surveillance Cameras: Balancing Safety and Ethics

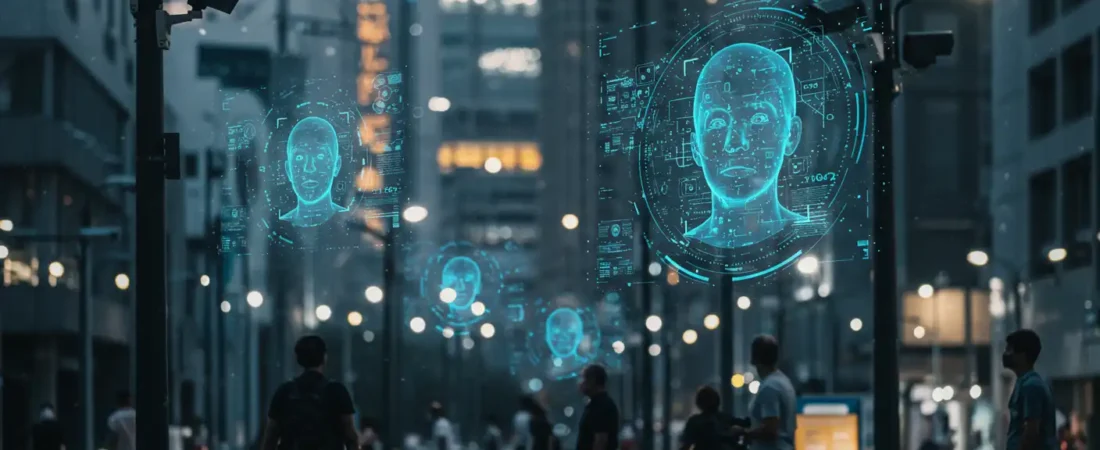

Security surveillance cameras are everywhere: in airports, shopping malls, workplaces, and even residential neighborhoods. While their original purpose was simply to capture footage for later review, today they are increasingly integrated with artificial intelligence and facial recognition, technologies that raise profound concerns about privacy, human rights, and democracy. The promise of safety comes at the price of digital exposure. For homeowners, home security cameras offer peace of mind, but for entire societies, the mass deployment of surveillance raises questions about consent, fairness, and freedom.

This article explores the expanding role of security surveillance cameras, the ethical dilemmas of facial recognition, and how future governance might strike a balance between protection and digital rights.

Security Surveillance Cameras and Their Expanding Role

Historical Evolution and Transformation

Security surveillance cameras have been around for decades, and their evolution has been dramatic. Early models were grainy analog CCTV systems that recorded footage on tape. They were “passive” technologies, used mostly for forensic review after an incident occurred. In the 2000s, the shift to digital and IP-networked cameras meant that footage could be transmitted live and stored remotely, including on cloud servers.

The biggest transformation, however, came with the integration of AI-powered surveillance. Instead of merely capturing video, modern cameras can interpret it in real time. Algorithms detect patterns, recognize license plates, count crowds, and even attempt to flag suspicious body language. According to industry estimates, more than 770 million surveillance cameras are deployed worldwide, and a significant portion already incorporates artificial intelligence (Statista).

Role in Modern Cities

Urban centers rely heavily on security cameras to maintain order. Cities like London, Beijing, and New York are blanketed with cameras linked to central monitoring systems. Police forces argue that cameras reduce petty crime, vandalism, and even terrorism attempts. For example, in the UK, CCTV footage was critical in tracing the suspects’ movements after the 2005 London bombings.

But this ever-watchful eye has consequences. Citizens may feel safer, yet they also live under constant observation. The question arises: at what point does safety turn into surveillance overreach?

The Shift from Passive to Proactive Surveillance

The transition from passive CCTV to proactive surveillance AI is profound. Instead of merely recording for evidence, cameras now make judgments in real time. Smart systems can flag unattended bags, match faces against watchlists, and are even marketed as able to predict potential threats through behavioral analysis.

While these capabilities enhance public safety, they also create risks. Misidentification can lead to wrongful detentions, especially in communities already subject to disproportionate policing. According to the American Civil Liberties Union (ACLU), “the use of facial recognition in policing amplifies racial bias, misidentifies people of color, and risks false arrests” (ACLU Report).

Everyday Integration of Surveillance Artificial Intelligence

It’s not only governments using these technologies. Home security cameras are now mainstream. Products like Ring and Nest offer homeowners live feeds, visitor alerts, and AI-based recognition of packages or faces. For many, this is a leap in personal safety, but it comes with privacy trade-offs.

There have been cases of hackers gaining access to private cameras, as well as partnerships between camera companies and law enforcement that have made it easier for police to obtain footage from neighborhood cameras, sometimes without a warrant. This blurs the line between private and public surveillance.

Security Surveillance Cameras, Facial Recognition Ethics, and Human Rights

The ethics of facial recognition go far beyond convenience and security. They intersect with democracy, human rights, and personal freedom. The core dilemma is this: should governments and corporations have the right to identify and track individuals in real time without their consent?

Bias, Accuracy, and Accountability

AI facial recognition systems are not neutral. Research by the MIT Media Lab has shown that commercial facial analysis systems consistently perform worse on women and people with darker skin tones. In that study, error rates reached about 35% for darker-skinned women, compared with less than 1% for lighter-skinned men (MIT Media Lab Study).
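To see why differences in error rates matter at city scale, consider a rough back-of-the-envelope calculation. The sketch below is a hypothetical illustration, not data from any real deployment: it assumes a camera network scans a fixed number of faces per day against a watchlist, that none of the people scanned are actually on the list, and that each scan independently produces a false match at an assumed rate that differs between two demographic groups. The function name and all numbers are illustrative assumptions.

```python
# Hypothetical back-of-the-envelope estimate of false watchlist alerts.
# All numbers below are illustrative assumptions, not measurements.


def expected_false_alerts(faces_scanned: int, false_match_rate: float) -> float:
    """Expected number of innocent people wrongly flagged.

    Assumes every scanned face belongs to someone who is NOT on the
    watchlist, and that each scan independently triggers a false match
    with probability `false_match_rate`.
    """
    if faces_scanned < 0:
        raise ValueError("faces_scanned must be non-negative")
    if not 0.0 <= false_match_rate <= 1.0:
        raise ValueError("false_match_rate must be between 0 and 1")
    return faces_scanned * false_match_rate


if __name__ == "__main__":
    # Assumed: two groups, each contributing 50,000 scans per day.
    scans_per_group = 50_000
    # Assumed per-group false match rates: group B is misidentified
    # ten times as often as group A.
    rates = {"group A": 0.001, "group B": 0.01}
    for group, rate in rates.items():
        alerts = expected_false_alerts(scans_per_group, rate)
        print(f"{group}: about {alerts:.0f} false alerts per day")
```

The specific figures matter less than the structure of the calculation: the number of false alerts grows with the number of people scanned, so a disparity in error rates between groups translates directly into a disparity in who is wrongly stopped.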

The consequences are severe. Several wrongful arrests in the United States have been linked directly to faulty facial recognition matches. Without robust accountability frameworks, citizens may bear the consequences of errors made by opaque algorithms. Who takes responsibility: the software company, the government, or the camera operator?

Consent and Transparency

Another critical ethical issue is consent. In private spaces, like homes, people choose to install cameras. But in public areas, individuals rarely consent to being scanned by security surveillance cameras. Few cities disclose the extent of their surveillance, let alone how data is stored, analyzed, or shared.

Europe’s GDPR treats biometric data used to identify individuals as a special category that may be processed only under narrow legal conditions, but in countries without such laws, surveillance often operates unchecked. This lack of transparency undermines trust and fosters fear among citizens.

Security Surveillance Cameras with Artificial Intelligence, Digital Rights, and Future Governance

Growing Global Divide

The deployment of surveillance AI is not uniform. Democratic societies wrestle with balancing safety and civil liberties, while authoritarian governments often use it for political control. In China, mass facial recognition is often described as feeding into the “social credit system,” where citizens’ actions are scored and linked to privileges or punishments.

By contrast, the European Union’s AI Act largely bans real-time facial recognition by law enforcement in publicly accessible spaces, allowing only narrow exceptions. This highlights a global divide: some regions prioritize individual digital rights, while others prioritize state control.

Balancing Security and Freedom

This global divide encapsulates the ethical tension. Citizens want safety, but not at the cost of freedom. In smart cities, AI-connected security surveillance cameras can log people’s movements continuously. While this may deter crime, it can also have a chilling effect on freedom of expression.

Toward Responsible AI Security Surveillance Cameras

Responsible use of surveillance AI requires regulation, oversight, and public involvement. Possible frameworks include:

- Mandatory third-party audits of facial recognition systems.

- Clear expiration dates for data retention.

- Bans on use in certain contexts, such as protests.

- Transparent reporting requirements for governments and corporations.

The EU’s AI Act is one example of how governance might evolve, setting standards for ethical AI deployment.

Conclusion

The rise of security surveillance cameras, facial recognition, and surveillance AI is transforming how societies function. These tools promise safety and convenience but also threaten privacy and digital rights. The challenge lies in finding the balance: using technology responsibly while protecting democracy.

👉 As facial recognition and surveillance AI grow, your digital rights matter more than ever. Stay informed, demand transparency, and support ethical artificial intelligence initiatives to ensure security without sacrificing freedom.